NVIDIA at Computex 2025: GB300 Will Launch in the Third Quarter of This Year, with Full Liquid Cooling

01

Blackwell Systems in Full Production, GB300 to Launch in the Third Quarter

On the morning of May 19, NVIDIA CEO Huang Renxun delivered a keynote at COMPUTEX 2025. He said NVIDIA has grown from a chip company into an AI infrastructure company, and that the roadmaps it publishes are now crucial to data center planning worldwide. He predicted that AI will be everywhere, that AI infrastructure will become a necessity like electricity and the Internet, and that today's data centers are evolving into "AI factories." At the event, Huang Renxun also announced a series of major products, technology updates, and strategic partnerships.

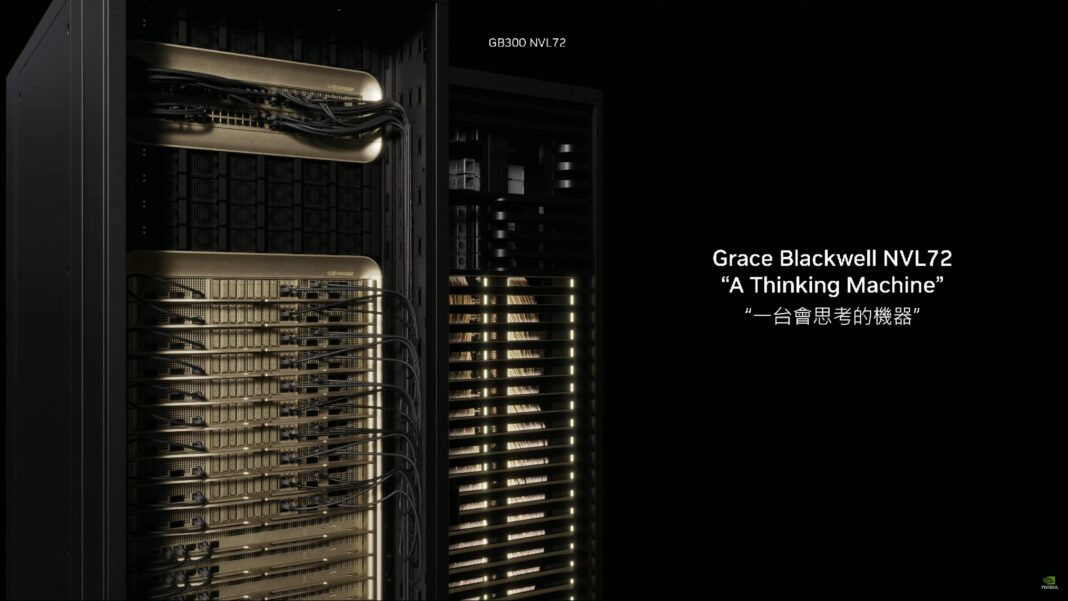

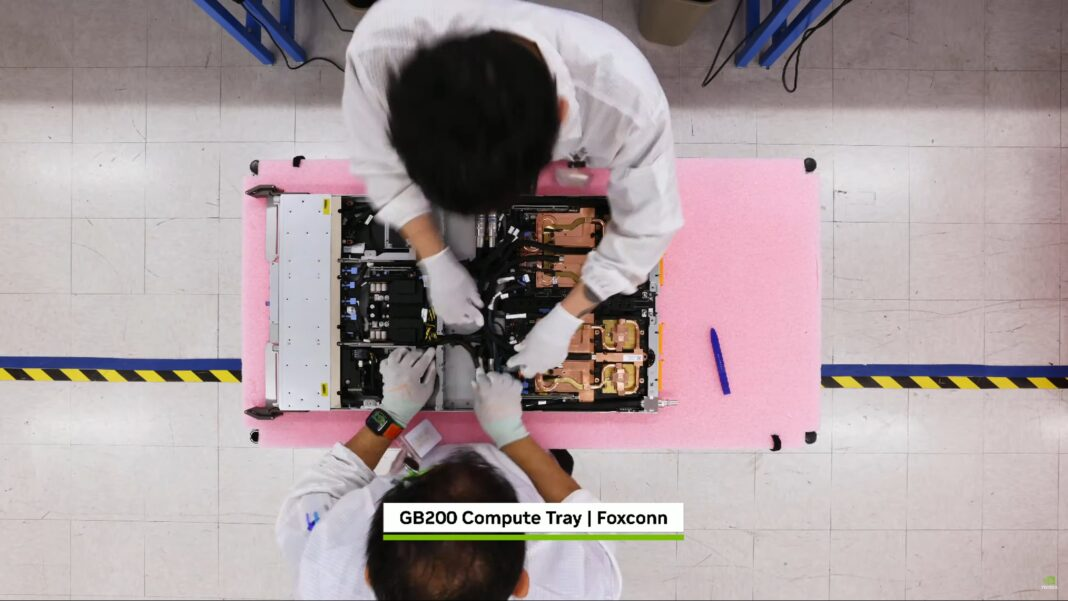

Huang Renxun said that Grace Blackwell systems are in full production, though he acknowledged that getting there has been extremely challenging. HGX-based Blackwell systems have been in full production since the end of last year and available since February, but NVIDIA is only now bringing Grace Blackwell systems online at scale. CoreWeave has been running these systems for several weeks, and many other cloud service providers have also begun deploying them.

Grace Blackwell GB300

Huang Renxun promised that the platform's performance will keep improving every year, on a cadence as regular as a metronome. In the third quarter of this year, it will be upgraded to Grace Blackwell GB300.

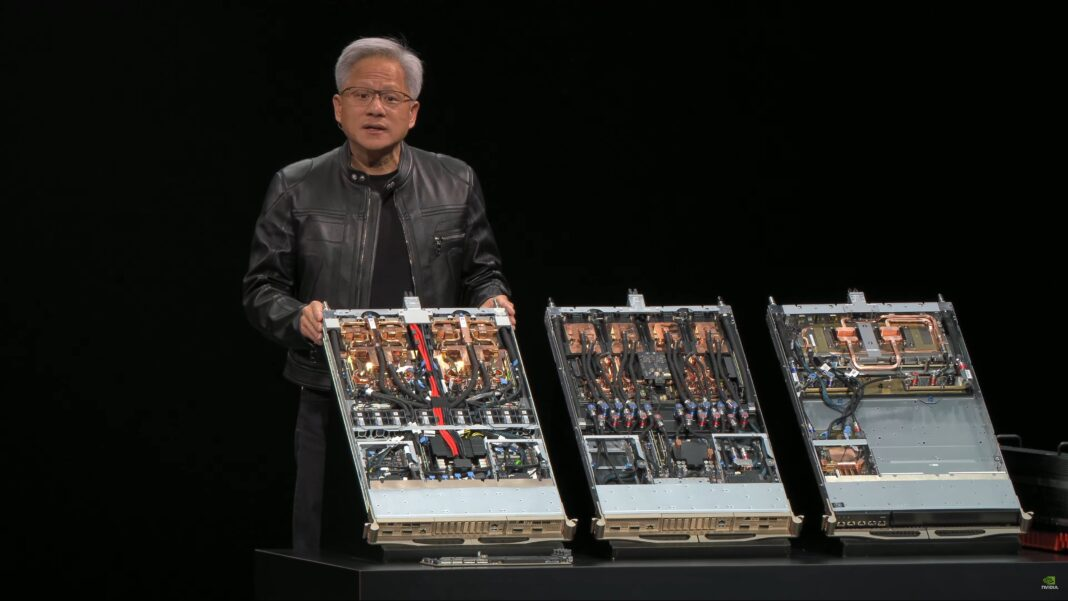

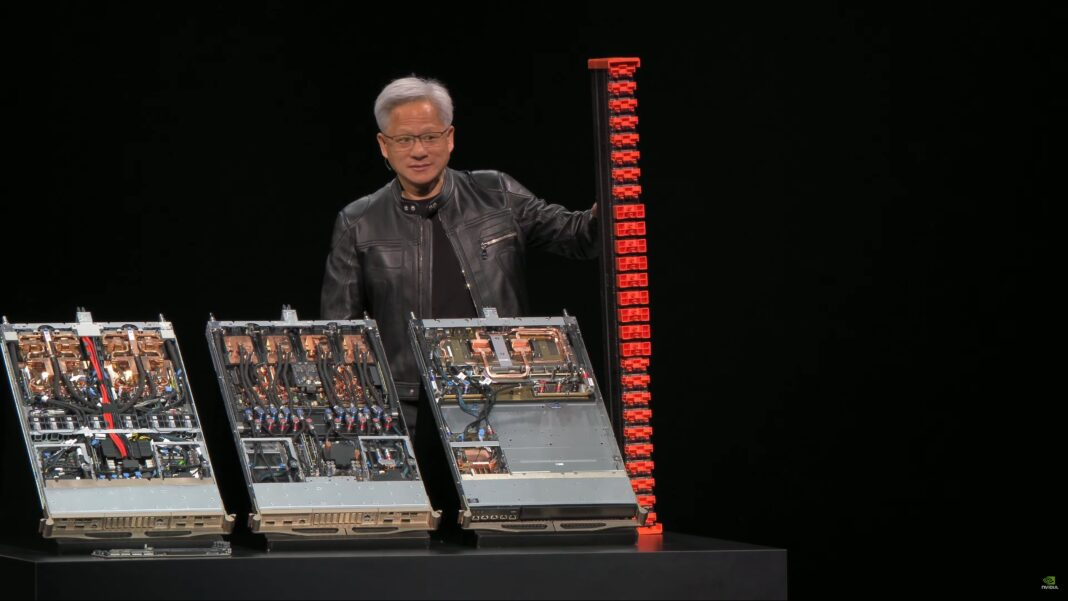

Below is the physical B300. Note that its central section is now fully liquid-cooled, while its external interfaces and dimensions are unchanged, so it can be plugged directly into existing systems and chassis.

GB200, GB300, NVLink switch

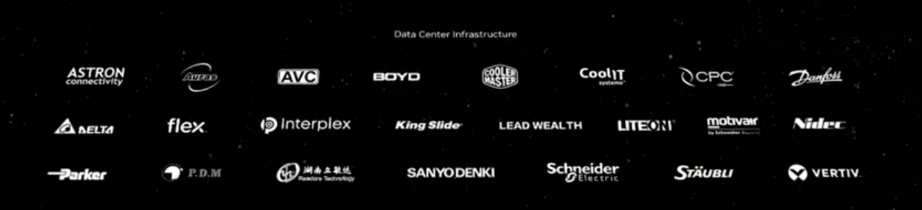

GB300's cold plates are custom-made by Cooler Master, AVC, Auras, and Delta.

Grace Blackwell GB200 liquid cooling tray

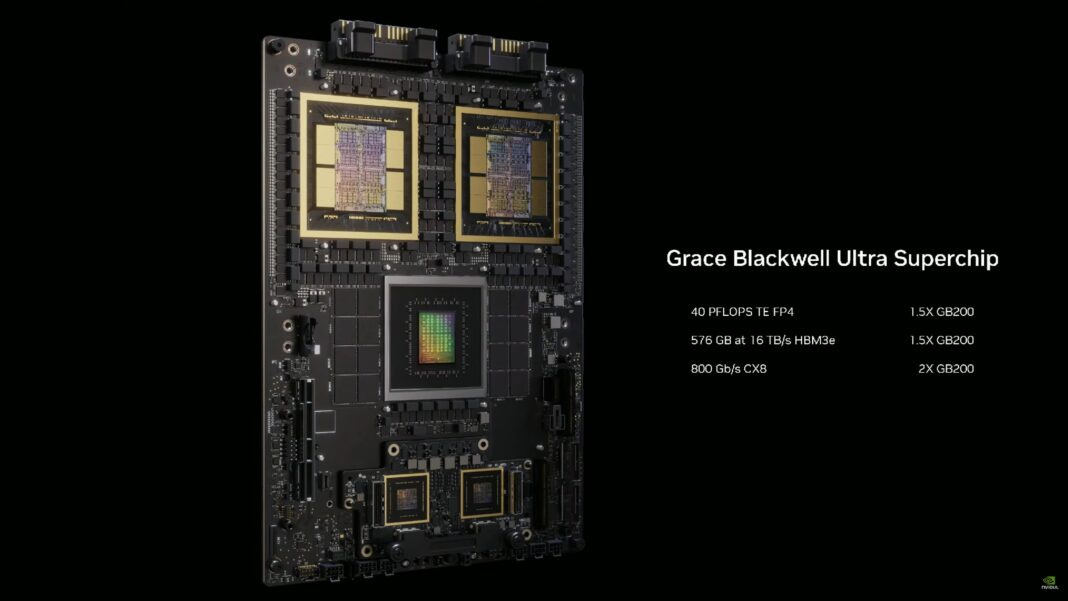

Huang Renxun also said that the Grace Blackwell GB300 system delivers 1.5 times the inference performance of its predecessor, while training performance stays roughly the same. A single node provides up to 40 petaflops of compute, roughly equivalent to the overall performance of the 2018 Sierra supercomputer, which was equipped with 18,000 Volta GPUs. Today, this one node alone can replace that entire supercomputer: an astonishing 4,000-fold performance increase in just six years. He called this the ultimate embodiment of Moore's Law. As he had noted earlier in the keynote, NVIDIA can improve AI computing performance by about a million times every decade, and it remains firmly on that trajectory (the power consumption of future chips will also rise significantly, and heat-dissipation requirements will increase dramatically).
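As a quick sanity check on the growth figures quoted in the keynote, the short Python sketch below converts each multi-year gain into the constant yearly multiplier that would compound to it. The function name `annual_factor` is hypothetical, and the Moore's Law line assumes the common "doubling every two years" reading; this is illustrative arithmetic only, not an NVIDIA methodology.

```python
def annual_factor(total_gain: float, years: float) -> float:
    """Return the constant yearly multiplier that compounds to total_gain over `years` years."""
    return total_gain ** (1.0 / years)


# Figures quoted in the keynote (variable names are hypothetical)
gain_6yr = annual_factor(4_000, 6)          # ~4,000x from Sierra (2018) to one GB300 node
gain_decade = annual_factor(1_000_000, 10)  # ~1,000,000x per decade

# Assumption: classic Moore's Law read as transistor counts doubling every two years
moore_yearly = annual_factor(2, 2)

print(f"4,000x over 6 years       -> {gain_6yr:.2f}x per year")
print(f"1,000,000x over 10 years  -> {gain_decade:.2f}x per year")
print(f"2x every 2 years (Moore)  -> {moore_yearly:.2f}x per year, "
      f"{moore_yearly ** 10:.0f}x per decade")
```

Both quoted figures work out to roughly 4x per year, so the six-year Sierra-to-GB300 gain and the million-fold-per-decade claim describe essentially the same pace, far steeper than the roughly 32x per decade implied by doubling every two years.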

NVIDIA GB300

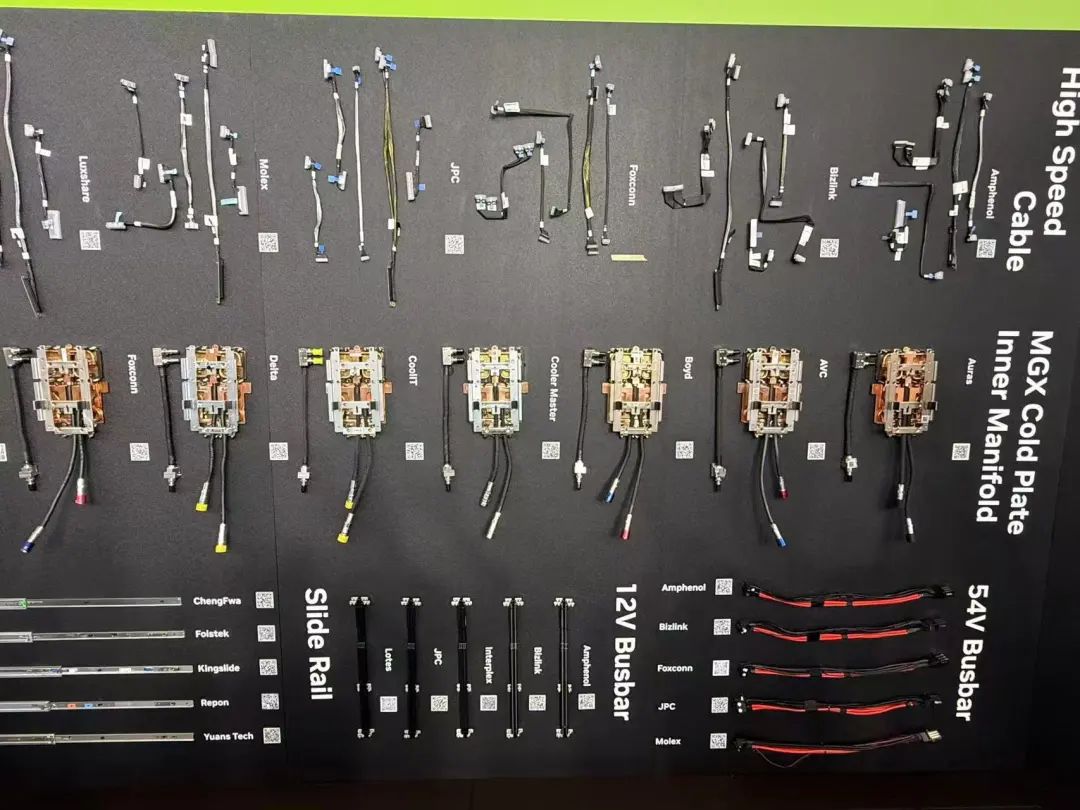

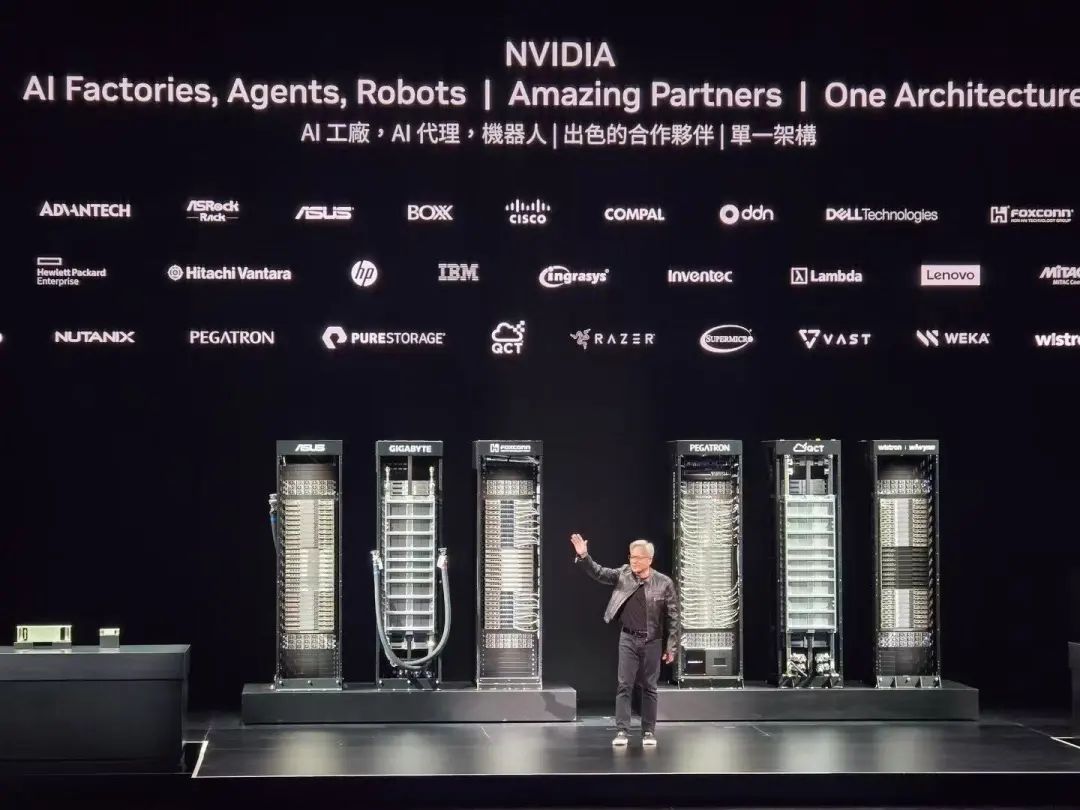

NVIDIA Server Partner Ecosystem

NVIDIA Liquid Cooling & Data Center Infrastructure Partner Ecosystem

02

NVIDIA's AI Factory Plan: Liquid Cooling Demand Will Rise Significantly

Almost everything NVIDIA builds is enormous in scale. The fundamental reason, Huang Renxun explained, is that NVIDIA is not simply building traditional data centers and servers; it is building AI factories. CoreWeave and Oracle Cloud are examples: the rack power density in these facilities is so high that racks must be spaced farther apart to distribute and manage the intense energy density. The core idea, he stressed, is that these are AI factories, not traditional data centers.

Examples include xAI's Colossus factory and the Stargate project, a massive undertaking covering 4 million square feet and requiring up to 1 gigawatt (GW) of power; facilities like these will need extensive liquid cooling. A 1 GW AI factory of this kind may require a total investment of $60 billion to $80 billion, of which the electronics and systems forming its computing core may account for $40 billion to $50 billion.
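To put the quoted investment range in per-watt terms, and to give a rough sense of why a gigawatt-class site needs so much liquid cooling, here is a back-of-the-envelope Python sketch. The cost figures come from the text. The cooling part is a hypothetical illustration using the standard heat-balance relation Q = ṁ·c_p·ΔT, under loudly stated assumptions: all 1 GW is removed as heat by water, with a 10 K temperature rise. Real facilities split heat between liquid and air loops and use different temperature differences.

```python
# Figures from the text: a 1 GW AI factory costing $60B-$80B in total,
# of which $40B-$50B is computing electronics and systems.
POWER_W = 1e9
TOTAL_COST_USD = (60e9, 80e9)
IT_COST_USD = (40e9, 50e9)

# Capital cost per watt of facility power, across the quoted total range
cost_per_watt = tuple(c / POWER_W for c in TOTAL_COST_USD)

# Widest possible IT share: smallest IT cost over largest total, and vice versa
it_share_min = IT_COST_USD[0] / TOTAL_COST_USD[1]
it_share_max = IT_COST_USD[1] / TOTAL_COST_USD[0]

# ASSUMPTIONS (not from the source): all facility power ends up as heat carried
# away by water with a 10 K temperature rise; water c_p is about 4186 J/(kg*K).
WATER_CP = 4186.0
DELTA_T_K = 10.0


def coolant_mass_flow(heat_w: float, delta_t_k: float, cp: float = WATER_CP) -> float:
    """Mass flow (kg/s) needed to absorb heat_w watts, from Q = m_dot * c_p * dT."""
    return heat_w / (cp * delta_t_k)


flow_kg_s = coolant_mass_flow(POWER_W, DELTA_T_K)

print(f"Total cost per watt: ${cost_per_watt[0]:.0f}-${cost_per_watt[1]:.0f}")
print(f"IT share of investment: {it_share_min:.0%}-{it_share_max:.0%}")
print(f"Water flow for 1 GW at dT = {DELTA_T_K:.0f} K: {flow_kg_s:,.0f} kg/s")
```

Under these assumptions the arithmetic gives $60 to $80 of total investment per watt, a computing-hardware share between 50% and about 83% of the total, and a water flow on the order of 24,000 kg/s (roughly 24 cubic meters per second).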